Cloud hosting can be expensive, especially when you are forced to pay for resources you don’t need. At the same time, resource shortages can cause downtime. So what can developers do? This article describes five ways to pay only for the resources you actually use, without limiting your ability to give your application the resources it needs as it grows.

Here are five ways to stop overpaying for your cloud environment:

Accept That You Are Overpaying For The VMs

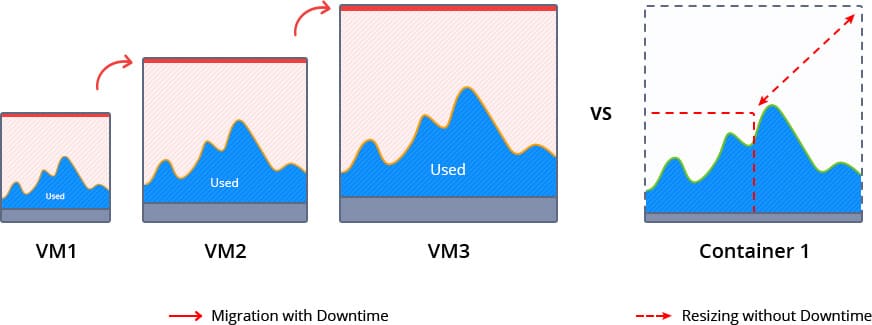

Every cloud hosting provider lets you choose from a range of VM sizes, and picking the right one is hard. If the VM is too small, you may see performance issues or downtime during peak hours. If it is over-allocated, a large share of its resources sits unused, and is effectively wasted, during normal load or idle periods.

Additionally, if you need just a few more resources for an existing VM, the only option is often to move to the next size up, which typically doubles it. That usually means downtime: the current VM has to be stopped while you redeploy or migrate the application, and you still have to deal with the other challenges that inevitably follow. If this sounds like your current cloud environment, it is time to look for a better solution.
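The waste caused by size doubling is easy to put into numbers. The sketch below assumes a hypothetical provider whose VM sizes double at each step (1, 2, 4, 8, 16, 32 GB of RAM); it picks the smallest size that covers the peak demand and reports how much of that allocation is idle. The catalogue and the load figures are illustrative assumptions, not any real provider's offering.

```java
import java.util.List;

/** Hypothetical VM catalogue in which each size doubles the previous one (assumption). */
final class VmSizing {
    static final List<Integer> SIZES_GB = List.of(1, 2, 4, 8, 16, 32);

    /** Smallest VM size (GB) that can hold the given peak demand. */
    static int pickSize(double peakGb) {
        for (int size : SIZES_GB) {
            if (size >= peakGb) {
                return size;
            }
        }
        throw new IllegalArgumentException("Peak demand exceeds the largest VM: " + peakGb + " GB");
    }

    /** Fraction of the chosen VM that is paid for but unused at the given load. */
    static double idleFraction(int vmSizeGb, double loadGb) {
        return (vmSizeGb - loadGb) / vmSizeGb;
    }

    public static void main(String[] args) {
        double peakGb = 5.0;    // assumed peak-hour demand
        double normalGb = 2.5;  // assumed demand during normal hours
        int size = pickSize(peakGb);
        System.out.printf("Peak %.1f GB needs a %d GB VM%n", peakGb, size);
        System.out.printf("Idle at peak: %.0f%%, idle at normal load: %.0f%%%n",
                100 * idleFraction(size, peakGb), 100 * idleFraction(size, normalGb));
    }
}
```

With the assumed 5 GB peak, the sketch selects an 8 GB VM, so 3 GB is paid for but unused even at peak, and 5.5 GB sits idle at the assumed normal load of 2.5 GB.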

How To Scale Up And Down Efficiently

Vertical scaling adjusts an instance’s CPU and memory to match its current load. Configured properly, it works well for both monolithic applications and microservices.

Implementing vertical scaling in a VM, that is, adding or removing resources on the fly without downtime, is difficult. VM technologies support memory ballooning, but the process is not fully automated: it requires tooling that monitors memory pressure in both the host and the guest operating system (OS) and then triggers scaling up or down as appropriate. In practice this works poorly, because memory sharing has to be automatic to be truly efficient.

Container technology offers far more flexibility, because Linux control groups (cgroups) let containers on the same host share resources automatically. Resources that a container does not consume within its limits are automatically available to other containers running on the same hardware node. And unlike with VMs, container resource limits can be changed without restarting the running instances.
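On a Linux host that uses cgroup v2, a process inside a container can read its own memory limit and current usage from the cgroup filesystem, which is one way to observe a limit being raised or lowered while the instance keeps running. This is a minimal sketch, assuming the container’s cgroup is visible at `/sys/fs/cgroup` (the usual layout for cgroup v2 containers); cgroup v1 hosts use different file names. In cgroup v2, `memory.max` holds either a byte count or the literal `max` for “no limit”, and `memory.current` holds the current usage in bytes.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.OptionalLong;

/** Reads this container's cgroup v2 memory limit and usage (assumes cgroup v2 at /sys/fs/cgroup). */
final class CgroupMemory {
    static final Path CGROUP_ROOT = Path.of("/sys/fs/cgroup"); // assumed mount point

    /** Parses memory.max content: a byte count, or "max" meaning unlimited (empty result). */
    static OptionalLong parseLimit(String raw) {
        String value = raw.trim();
        return value.equals("max") ? OptionalLong.empty() : OptionalLong.of(Long.parseLong(value));
    }

    static String read(String file) throws IOException {
        return Files.readString(CGROUP_ROOT.resolve(file));
    }

    public static void main(String[] args) {
        try {
            OptionalLong limit = parseLimit(read("memory.max"));
            long usage = Long.parseLong(read("memory.current").trim());
            System.out.println("usage bytes: " + usage);
            System.out.println("limit bytes: "
                    + (limit.isPresent() ? String.valueOf(limit.getAsLong()) : "unlimited"));
        } catch (IOException | NumberFormatException e) {
            // Not in a cgroup v2 container (or files missing): report and continue.
            System.out.println("cgroup v2 memory files not available: " + e.getMessage());
        }
    }
}
```

Running it before and after the limit is changed from the host (for example with Docker’s `docker update --memory`) should show the new value without restarting the container.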

Migration From VMs To Containers

It is a common myth that containers are suitable only for greenfield applications, meaning microservices and cloud-native applications. Real-world use cases show that existing workloads can be migrated from VMs to containers without rewriting or redesigning the applications.

For legacy and monolithic applications, system containers are the better choice, because they let you reuse the architecture and configuration implemented in the original VM. With system containers you can:

- Use standard network configurations such as multicast

- Run several processes inside one container

- Avoid issues caused by incorrectly determined memory limits

- Write to the local file system and keep it intact across container restarts

- Troubleshoot issues and analyze logs the same way you already do

- Use a variety of SSH-based configuration tools

To start migrating your application from a VM to containers, you first need to prepare the container images. For system containers this can be somewhat more complicated than for application containers, so you can either build the images yourself or use an orchestrator’s pre-configured system container templates, such as MilesWeb’s.

Place each application component in its own isolated container. This simplifies the application topology, and you may find that some parts of the project become unnecessary in the new architecture.

Enable A Garbage Collector With Memory Shrinking

To scale Java applications vertically, containers alone are not enough; the Java VM must also be configured correctly. In particular, make sure the garbage collector you choose can shrink memory at runtime.

A garbage collector (GC) with memory shrinking compacts the live objects together, removes garbage objects, and releases unused memory back to the operating system. With a non-shrinking GC or suboptimal Java VM start options, by contrast, a Java application keeps all of its committed RAM, so its memory cannot scale vertically with the load. Unfortunately, the default garbage collector in Java Development Kit (JDK) 8, Parallel GC (`-XX:+UseParallelGC`), does not shrink the heap effectively and so does not solve the Java VM’s inefficient RAM usage. An easy fix is to switch to Garbage-First (G1) with `-XX:+UseG1GC`.

Two parameters control how the Java heap scales vertically:

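- `-Xms`: the initial and minimum heap size; a small value lets the heap shrink close to actual usage

- `-Xmx`: the maximum heap size, i.e. the upper scaling limit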
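To check which collector a JVM is actually running and how its committed heap compares with the `-Xmx` ceiling, the standard `java.lang.management` API and `Runtime` are enough. A minimal sketch, assuming it is launched with options like those discussed in this section (for example `java -XX:+UseG1GC -Xms32m -Xmx2g HeapReport`); with G1 the collector beans are typically named “G1 Young Generation” and “G1 Old Generation”, and newer JDKs may list additional ones.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

/**
 * Prints the active garbage collectors and the committed vs maximum heap size.
 * Assumed launch: java -XX:+UseG1GC -Xms32m -Xmx2g HeapReport
 */
final class HeapReport {
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        long mb = 1024 * 1024;
        System.out.println("Collectors: " + collectorNames());
        // totalMemory(): heap currently committed (reserved) by the JVM
        System.out.println("Committed heap: " + rt.totalMemory() / mb + " MB");
        // maxMemory(): the upper bound, governed by -Xmx
        System.out.println("Max heap: " + rt.maxMemory() / mb + " MB");
        System.out.println("Used heap: " + (rt.totalMemory() - rt.freeMemory()) / mb + " MB");
    }
}
```

Running it periodically (or exposing the same values through monitoring) shows whether committed heap follows real usage or stays at its high-water mark.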

It is also important that the application triggers a full garbage collection during periods of low load or idleness, so that unused memory is actually released. This can be done inside the application logic or automated with an external garbage collector (GC) agent.
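The in-application variant can be sketched as a background task that periodically checks system load and requests a full GC only when the host is quiet. This is an illustrative assumption, not a specific agent: the threshold, the interval, and the use of the OS load average as the “idle” signal are hypothetical choices. `getSystemLoadAverage()` returns a negative value where it is unavailable, and `System.gc()` is only a request (the `-XX:+DisableExplicitGC` flag turns it into a no-op). On JDK 12 and later, G1 can also do this by itself via `-XX:G1PeriodicGCInterval` (JEP 346).

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical in-process "idle GC" trigger: requests a full GC when system load is low. */
final class IdleGcTrigger {
    // Assumed idle threshold: 1-minute load average per CPU below this value counts as idle.
    static final double IDLE_LOAD_PER_CPU = 0.2;

    /** True when the load average is known (non-negative) and below the per-CPU threshold. */
    static boolean isIdle(double loadAverage, int cpus) {
        return loadAverage >= 0 && loadAverage / cpus < IDLE_LOAD_PER_CPU;
    }

    /** Starts a daemon thread that checks for idleness every periodMinutes. */
    static ScheduledExecutorService start(long periodMinutes) {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "idle-gc-trigger");
            t.setDaemon(true); // do not keep the JVM alive just for this task
            return t;
        });
        scheduler.scheduleAtFixedRate(() -> {
            if (isIdle(os.getSystemLoadAverage(), os.getAvailableProcessors())) {
                System.gc(); // request a full collection; G1 may then return free heap to the OS
            }
        }, periodMinutes, periodMinutes, TimeUnit.MINUTES);
        return scheduler;
    }
}
```

In an application, `IdleGcTrigger.start(5)` would be called once at startup. Whether freed heap is actually handed back to the OS after the collection also depends on the JVM’s heap-sizing settings, such as `-XX:MaxHeapFreeRatio`.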

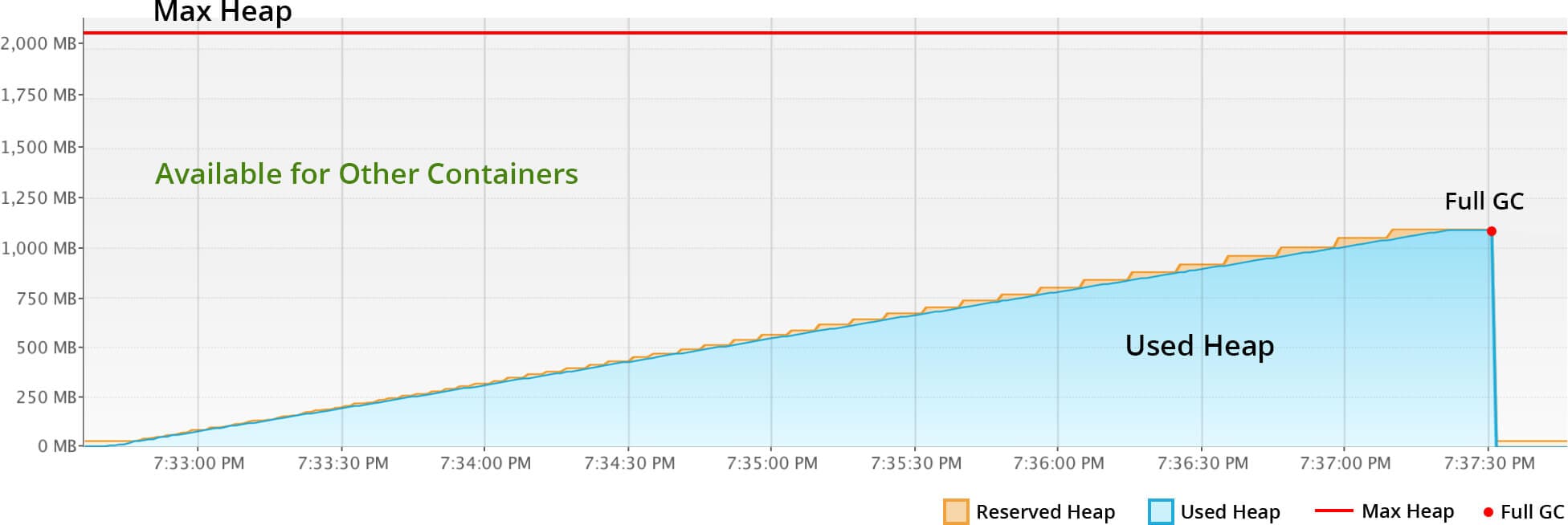

The graph above shows the effect of the following Java VM start options over a period of roughly 300 seconds:

`-XX:+UseG1GC -Xmx2g -Xms32m`

As the graph shows, the reserved RAM (orange) grows gradually in step with actual usage (blue). All unused memory below the maximum heap limit is available to other containers or processes on the same host, so it is not wasted sitting idle.

Select A Cloud With The ‘Pay As You Use’ Model

Cloud hosting is often compared to electricity, because it supplies resources on demand and is billed on a ‘pay as you go’ model. The key difference is that your electricity bill does not double when you use slightly more power than usual.

‘Pay As You Go’

Most cloud hosting vendors offer ‘pay as you go’ billing: you start with a small machine and add more servers as the project grows. However, as discussed above, it is hard to choose a size that fits your current needs and also scales with demand without downtime. As a result, you keep paying for limits: first for a small machine, then for one twice the size, and eventually for horizontal scaling across several underutilized VMs.

‘Pay As You Use’

In contrast, ‘pay as you use’ billing follows the application’s current load, adding or removing resources on the fly, which container technology makes possible. You are charged only for the resources you actually consume, and scaling up requires no complex reconfiguration.
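A toy calculation makes the difference between the two billing models concrete. The sketch assumes a hypothetical price per GB-hour and a day of hourly memory samples: under ‘pay as you go’ the bill follows the provisioned VM size (sized for the peak), while under ‘pay as you use’ it follows the memory actually consumed each hour. The price, the usage profile, and the hourly granularity are illustrative assumptions, not any provider’s tariff.

```java
/** Toy comparison of "pay as you go" (provisioned size) vs "pay as you use" (actual usage). */
final class BillingComparison {
    /** Cost when paying for a fixed provisioned size every hour. */
    static double payAsYouGo(double provisionedGb, int hours, double pricePerGbHour) {
        return provisionedGb * hours * pricePerGbHour;
    }

    /** Cost when paying only for the memory consumed in each hourly sample. */
    static double payAsYouUse(double[] hourlyUsageGb, double pricePerGbHour) {
        double gbHours = 0;
        for (double used : hourlyUsageGb) {
            gbHours += used;
        }
        return gbHours * pricePerGbHour;
    }

    public static void main(String[] args) {
        double price = 0.01; // assumed price per GB-hour
        // Assumed day: 20 quiet hours at 1 GB and 4 peak hours (18:00 to 22:00) at 6 GB.
        double[] usage = new double[24];
        for (int h = 0; h < 24; h++) {
            usage[h] = (h >= 18 && h < 22) ? 6.0 : 1.0;
        }
        double provisioned = 8.0; // VM sized to cover the 6 GB peak (next doubling step)
        System.out.printf("Pay as you go:  %.2f%n", payAsYouGo(provisioned, usage.length, price));
        System.out.printf("Pay as you use: %.2f%n", payAsYouUse(usage, price));
    }
}
```

With these assumed numbers, the fixed 8 GB VM is billed for 192 GB-hours (8 GB × 24 h), while only 44 GB-hours (20 × 1 GB + 4 × 6 GB) are actually consumed.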

Adopting the ‘pay as you use’ model:

- Helps avoid performance issues caused by resource shortages

- Keeps costs affordable

- Avoids the unnecessary complexity of unplanned horizontal scaling

- Reduces cloud spending regardless of the application type

- Avoids resource waste and makes optimal use of available resources